The future of voice assistants: a personal digital clone? Part 1

What Google Duplex tells us about the future of voice

This article is a part of the Battery001 issue: An issue dedicated to Voice!

Recently I published an article about what is called Conversational AI. The term refers to our beloved voice assistants: Alexa, Siri, Google Assistant, none of them famous for their conversational skills.

From the article:

We will get better and better at mimicking human behavior and suddenly we will have created something that passes not only the Turing test but also gets our emotional and intellectual approval to become a part of the conversation.

And when we get there, what will we talk about?

It turns out to be haircuts and dinner bookings!!

The demo of Duplex at Google I/O 2018 left me with two very contrasting feelings. The first was: wow, super impressive! The second: is this really solving the right problem?

I get the point: some businesses don’t have any online booking. Another solution to that problem would be to give them online booking for free, but whatevs.

Now Google is rolling out the Duplex functionality, and we get a taste of what it will be like to use. This is how to make a reservation:

and here is what happens on the other side:

Two videos and again two different feelings. The last one, super impressed! The first, well…

Getting Google Assistant to make the booking took 75 seconds, felt awkward and quite far from a natural conversation, and would take 8 screenshots and a lot of text to describe. The actual booking call was very smooth and took 35 seconds.

As a tech demo it is very impressive, but there are far better use cases. Swapping roles, so that a human calls a business and Duplex books the appointment, seems like a simple change that would solve real-world problems for a lot of small and medium-sized companies. Next would be replacing all those extremely annoying IVR phone systems, then support, then sales, then…

While self-driving cars are not around the corner as far as I can see, this will start replacing humans pretty soon. The implications are profound.

Looking under the Duplex hood (the layman version)

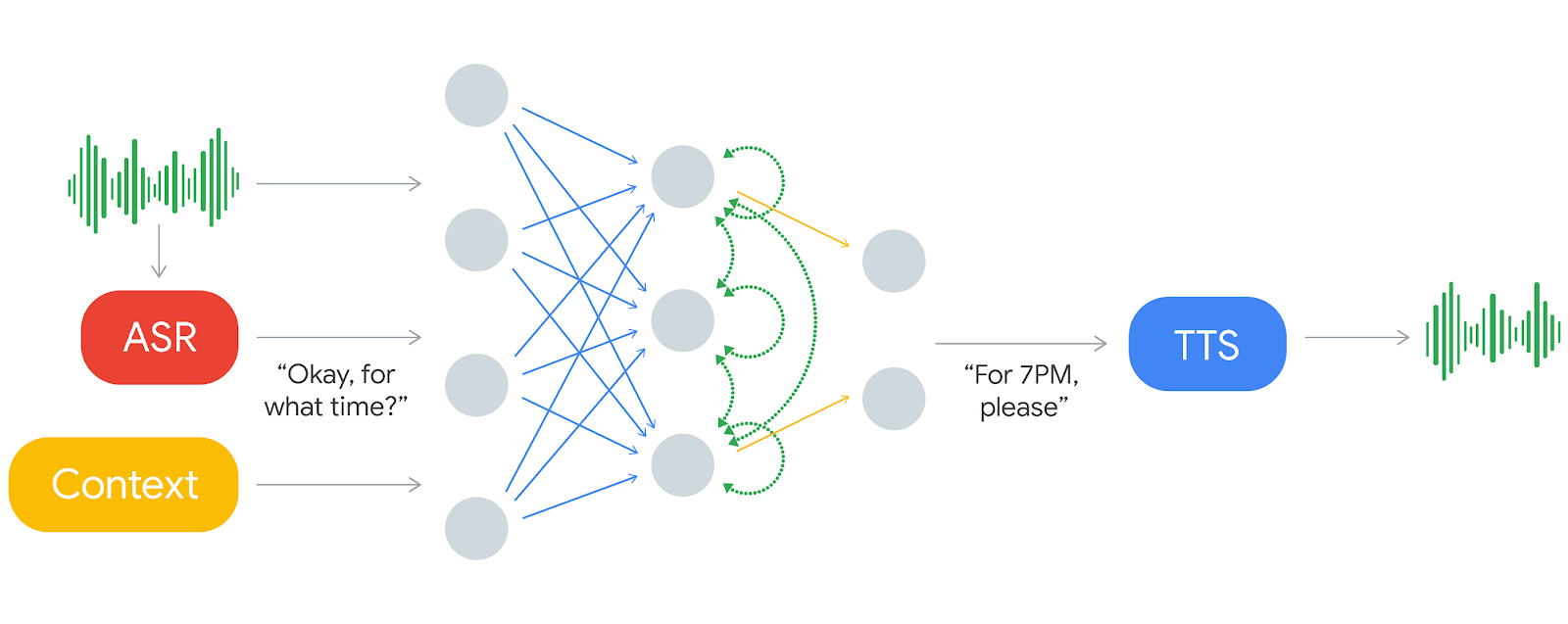

There are three components involved in the process: converting the user’s speech to text, analyzing that text to find a proper response, and generating speech from that response.
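
For the technically curious, that three-step pipeline can be sketched as three functions chained together. This is a toy, not Google’s code: the function names are hypothetical, “audio” is faked as raw bytes so the example runs on its own, and the middle component is a hard-coded lookup table standing in for a trained model.

```python
# Hypothetical stand-ins for the three components. Real systems use trained
# models; here "audio" is faked as bytes so the sketch runs on its own.

def speech_to_text(audio: bytes) -> str:
    """Component 1, speech recognition (stub: decode the fake audio)."""
    return audio.decode("utf-8")


def find_response(text: str) -> str:
    """Component 2, the 'magic' middle part (stub: a tiny lookup table)."""
    canned = {
        "do you have a table for four at seven?": "Yes, we do. What name is it under?",
    }
    return canned.get(text.lower().strip(), "Sorry, could you repeat that?")


def text_to_speech(text: str) -> bytes:
    """Component 3, speech generation (stub: encode the text as fake audio)."""
    return text.encode("utf-8")


def handle_turn(audio_in: bytes) -> bytes:
    """One conversational turn: speech -> text -> response text -> speech."""
    text = speech_to_text(audio_in)
    reply = find_response(text)
    return text_to_speech(reply)


print(handle_turn(b"Do you have a table for four at seven?").decode("utf-8"))
# → Yes, we do. What name is it under?
```

The first and last stubs are where the impressive speech recognition and speech generation would plug in; everything interesting about Duplex lives in what replaces `find_response`.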

The speech recognition part seems to work flawlessly! The generated speech blows my mind! It is expressive and has a natural flow and intonation. But the real magic happens in the component in the middle, the one that takes what the user said and finds a proper response.

Duplex works autonomously, i.e. without any human involvement, and has been trained for this task and this task only. From the Duplex post on Google’s AI blog:

One of the key research insights was to constrain Duplex to closed domains, which are narrow enough to explore extensively. Duplex can only carry out natural conversations after being deeply trained in such domains. It cannot carry out general conversations.

Booking a restaurant table or a haircut is clearly defined in scope: the number of paths the conversation can take is limited, and so is the number of data types (e.g. how many people, what time). The scope is much bigger than it seems, but not infinite. So all in all, it seems doable, and obviously it is, since they did it!
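
One way to picture such a closed domain is as a form with a handful of typed slots that the conversation has to fill. The sketch below assumes a hypothetical `RestaurantBooking` frame and uses naive regular expressions to pick values out of sentences; it illustrates how bounded the data types are, not how Duplex actually parses speech.

```python
import re
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class RestaurantBooking:
    """Hypothetical 'frame' for a closed domain: a few slots of known types."""
    party_size: Optional[int] = None  # how many
    time: Optional[str] = None        # what time, e.g. "7pm"
    name: Optional[str] = None        # who the booking is for

    def missing(self) -> list[str]:
        """Slots the conversation still has to fill, in declaration order."""
        return [f.name for f in fields(self) if getattr(self, f.name) is None]


def update(booking: RestaurantBooking, utterance: str) -> None:
    """Very naive slot extraction with regular expressions (illustration only)."""
    if m := re.search(r"\b(\d+)\s+(?:people|persons|guests)\b", utterance):
        booking.party_size = int(m.group(1))
    if m := re.search(r"\b(\d{1,2}(?::\d{2})?\s?(?:am|pm))\b", utterance, re.I):
        booking.time = m.group(1).lower().replace(" ", "")
    if m := re.search(r"\bunder (?:the name )?([A-Z][a-z]+)", utterance):
        booking.name = m.group(1)


booking = RestaurantBooking()
update(booking, "A table for 4 people at 7pm, please.")
print(booking.missing())  # → ['name']
update(booking, "Put it under Anna.")
print(booking.missing())  # → []
```

Once `missing()` returns an empty list, the booking has everything it needs. A real system has to cope with far messier phrasing (“half past seven”, “me and three friends”), which is part of why the scope is bigger than it seems.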

But what does “training” mean?

I never thought I would write about artificial neural networks but here we go!

An artificial neural network is like a magical black box (yes, really!), and each one has its own specific function. As an example, let’s pick one with a soft spot for cats. Show the black box an image of a cat and it confirms: this is a cat ❤️. Show it a hot rod racer and it gives the verdict: no cat 👎.

In order to do its magic, the black box has to be “trained”. In the beginning it knows nothing. We show it a cat (obviously not a real cat, just the pixels!); it cannot even spell cat, but it gives an answer anyway. Each time it gives an answer, it gets feedback on whether it was right or wrong. This way it “learns” and gradually gets better at recognizing cats. At some point we decide that it does the job well enough, and we end the “training”.

“Training” and “learning” sound, well, human, as if there were some living thing in the black box. There isn’t. It really seems magical, but it is “just” math, a statistical application, and it doesn’t “learn”; it calibrates itself based on feedback on its performance.
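
The feedback loop can be sketched with a single artificial neuron (a perceptron), the simplest building block of a neural network. Everything here is a made-up toy: each “image” is reduced to two hypothetical features instead of real pixels, and the data is invented. What it does show is the calibration: guess, compare with the right answer, nudge the numbers.

```python
# Toy data, made up for illustration: each "image" is reduced to two
# hypothetical features, (pointy_ears, shiny_metal), each between 0 and 1.
# Label 1 means "cat", 0 means "no cat".
examples = [
    ((0.9, 0.1), 1),  # cat
    ((0.8, 0.0), 1),  # cat
    ((0.7, 0.2), 1),  # cat
    ((0.1, 0.9), 0),  # hot rod
    ((0.2, 0.8), 0),  # hot rod
    ((0.0, 1.0), 0),  # hot rod
]


def predict(weights, bias, x):
    """The 'black box': a weighted sum followed by a yes/no threshold."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0


def train(examples, epochs=20, learning_rate=0.1):
    weights, bias = [0.0, 0.0], 0.0  # starts out knowing nothing
    for _ in range(epochs):
        for x, label in examples:
            error = label - predict(weights, bias, x)  # feedback: +1, 0 or -1
            # Calibrate: nudge each weight in the direction that reduces the error.
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train(examples)
print(predict(weights, bias, (0.85, 0.05)))  # cat-like input → 1
print(predict(weights, bias, (0.05, 0.95)))  # hot-rod-like input → 0
```

There is no understanding of cats anywhere in there, just two weights and a bias that ended up calibrated so that cat-like inputs score above zero.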

The really weird thing is that we do not fully understand what is happening inside the box. Or, put another way:

Artificial neural networks are simply deterministic algorithms that statistically approximate functions. It’s just not possible to exactly say which function they approximate.

We have created the application but we cannot peek inside.

It performs its magic anyhow. A magical cat black box.

“Training” Duplex

Over to Duplex. Instead of cat and hot rod images, they use real conversations in which humans book a dinner or a haircut. The black box is trained in a similar way, but now it is text in and text out: if the user says a specific sequence of words, the black box should reply with another specific sequence of words.

To begin with, the responses are totally off, but by comparing them with the answers in the real conversations the black box “learns”. At some point it becomes good enough: a magical haircut-booking black box. Hello, Duplex!!
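
The same guess, compare, adjust loop works for text. The sketch below swaps in a technique far simpler than the model behind Duplex: instead of generating a sequence of words, a multiclass perceptron picks one reply from a fixed list, based on which words the caller used. The training pairs are invented for illustration.

```python
from collections import defaultdict

# Made-up training pairs: (what the caller said, what the human replied
# in the "real" conversation). Purely illustrative data.
conversations = [
    ("hi i would like to book a table", "Sure, for how many people?"),
    ("can i book a table for tonight", "Sure, for how many people?"),
    ("we are four people", "What time would you like?"),
    ("it will be two of us", "What time would you like?"),
    ("around seven please", "Great, what name should I put it under?"),
    ("at eight thirty", "Great, what name should I put it under?"),
]
replies = sorted({reply for _, reply in conversations})


def score(weights, words, reply):
    """How strongly the box associates these words with a candidate reply."""
    return sum(weights[(w, reply)] for w in words)


def respond(weights, utterance):
    """Text in, text out: pick the highest-scoring reply (ties go to the first)."""
    words = utterance.lower().split()
    return max(replies, key=lambda r: score(weights, words, r))


def train_replies(pairs, epochs=10):
    weights = defaultdict(float)  # (word, reply) -> weight, all starting at zero
    for _ in range(epochs):
        for utterance, target in pairs:
            guess = respond(weights, utterance)
            if guess != target:  # compare with the reply in the real conversation
                for w in utterance.lower().split():
                    weights[(w, target)] += 1.0  # strengthen the right reply
                    weights[(w, guess)] -= 1.0   # weaken the wrong guess
    return weights


reply_weights = train_replies(conversations)
print(respond(reply_weights, "table for tonight please"))  # → Sure, for how many people?
print(respond(reply_weights, "we are two people"))         # → What time would you like?
```

Notice that the box never “understands” tables or people; it has only learned which words tend to come before which reply.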

This is obviously not the whole truth: some of it I knowingly omit, and most of it I am blissfully unaware of. But that is how deep (!) we get here and now.

So is Duplex the future?

In one way it is: it shows that it is possible to mimic human conversations in a way that is almost indistinguishable from the real thing. So far Duplex has a very limited scope, haircuts and dinners, but the method is applicable to many use cases. I am sure we will see a large number of new applications in the coming year.

So is the assistant of the future just the sum of all these applications? The data and processing needs would approach infinity; should we just hope that Moore’s law applies?

There are other concerns, such as privacy. The more useful an assistant becomes, the more personal it gets. Do we want Google, Apple, and Amazon to listen in on our most private conversations and apply their generalized models that have been “improved” by supervised training?

This is the topic of part 2, don’t miss out, it will get super futuristic!
